Function Calling Tutorial with gpt-3.5-turbo-0613 and gpt-4-0613

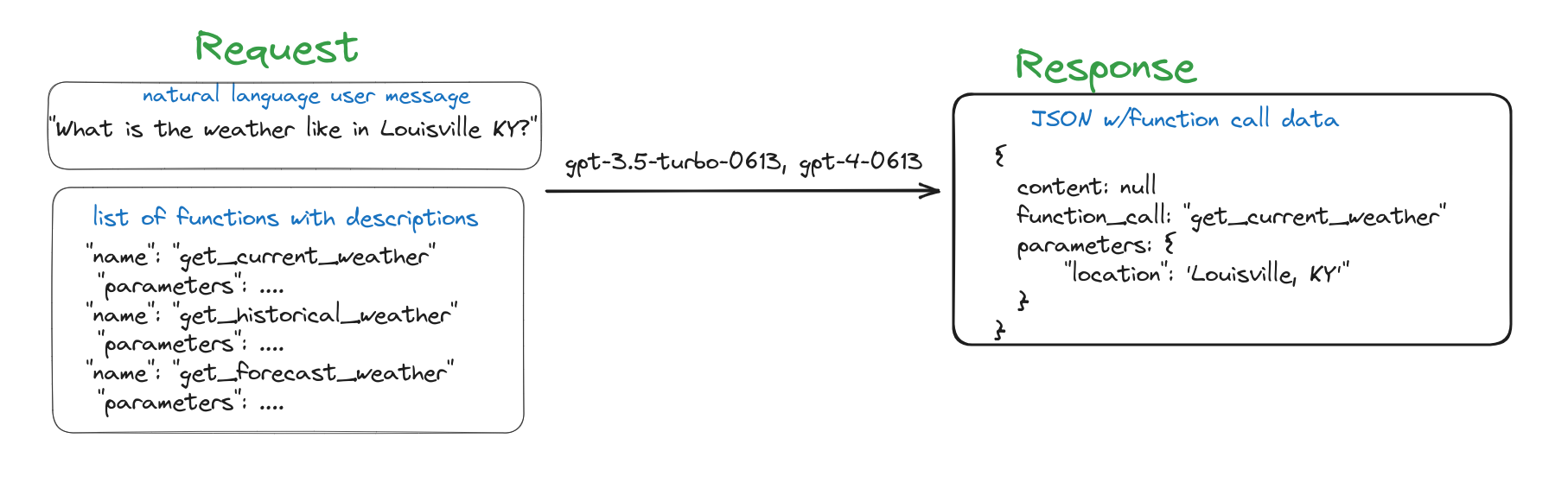

OpenAI’s gpt-3.5-turbo-0613 and gpt-4-0613 models are fine-tuned to detect when a function needs to be called based on user input and to respond with JSON that matches the function’s signature.

These models enable developers to create ChatGPT plugin functionality that can be used outside of the ChatGPT web app. This tutorial will show you how to use OpenAI’s gpt-3.5-turbo-0613 and gpt-4-0613 models to develop with the function calling feature.

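In this tutorial, we will go over the following:

- What is Function Calling

- How to Use Function Calling

- Diving Deeper: function_call parameter options, multiple functions, structured data from non-existent functions, and generating a function call JSON Schema

- Troubleshooting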

What is Function Calling

Function calling enables programmers to consistently retrieve structured data from OpenAI’s GPT models. OpenAI isn’t executing the function for you, but it returns a JSON object with the function name it thinks should be called and the arguments to pass to the function.

It looks like this:
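As a rough illustration of that shape, the snippet below decodes a hand-written sample message modeled on the response shown in Step 4 (it is not live API output). Note that arguments arrives as a JSON-encoded string, so it has to be decoded before use.

```python
import json

# Hand-written sample of an assistant message that suggests a function call.
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": "{\n \"location\": \"Louisville, KY\"\n}",
    },
}

function_call = sample_message["function_call"]
function_name = function_call["name"]
# "arguments" is a JSON-encoded string, so decode it into a dictionary.
function_args = json.loads(function_call["arguments"])

print(function_name)
print(function_args)
```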

This gives programmers the ability to:

- Create plugin-like functionality that can be used outside of the ChatGPT web app

  - Transform inquiries like “Message Ravi to see if he can join for lunch next Wednesday” into a command like send_message(recipient: string, content: string), or “How’s the temperature in New York?” to get_present_temperature(location: string, scale: 'celsius' | 'fahrenheit').

- Convert natural language into API requests or database queries

  - Change “Who are my top five clients this week?” to a private API request such as get_clients_by_income(start_period: string, end_period: string, cap: int), or “How many transactions did Delta Corp make last week?” to a SQL request utilizing sql_request(request: string).

- Extract structured data from text

  - Create a function called extract_entity_data(entities: [{name: string, birthdate: string, place: string}]) to draw out all entities cited in an Encyclopedia Britannica entry.
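To make the first use case concrete, here is a minimal sketch of how the send_message signature might be described to the API, in the same format this tutorial uses for functions later on. The description strings are assumptions made up for illustration.

```python
import json

# Hypothetical schema for send_message(recipient: string, content: string).
# The description texts are illustrative and not taken from any real API.
send_message_schema = {
    "name": "send_message",
    "description": "Send a message to a recipient",
    "parameters": {
        "type": "object",
        "properties": {
            "recipient": {
                "type": "string",
                "description": "Who receives the message",
            },
            "content": {
                "type": "string",
                "description": "The text of the message",
            },
        },
        "required": ["recipient", "content"],
    },
}

# The API expects a list of such schemas in the "functions" parameter.
functions = [send_message_schema]
print(json.dumps(functions, indent=2))
```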

How to Use Function Calling

Let’s walk through an example of how to use function calling with the new gpt-3.5-turbo-0613 and gpt-4-0613 models in Python.

Step 1: Install the OpenAI Python Client

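Install the OpenAI Python client with pip install openai. The code in this tutorial uses the openai.ChatCompletion interface, which was removed in version 1.0 of the library, so install an earlier release (for example, pip install "openai<1") to run it as written.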

Step 2: Create an OpenAI API Key

Create an environment variable called OPENAI_API_KEY set to your OpenAI API key.

Follow our OpenAI API key tutorial if you need help.
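If you want your script to fail early with a clear message when the variable is missing, a small check like the one below may help. load_api_key is a hypothetical helper written for this sketch, and the key value in the demonstration is a placeholder.

```python
import os


def load_api_key(env=None):
    """Return the API key from the environment, or raise a clear error."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY")
    if not api_key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")
    return api_key


# Demonstration with a stand-in mapping instead of the real environment.
print(load_api_key({"OPENAI_API_KEY": "sk-example"}))
```

In a real script you would call load_api_key() with no arguments so that it reads os.environ.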

Step 3: Create a New Python File

Create a new Python file called function_calling.py and add the following code:

    import openai
    import json

    # Example dummy function hard coded to return the same weather
    # In production, this could be your backend API or an external API
    def get_current_weather(location, unit="fahrenheit"):
        """Get the current weather in a given location"""
        weather_info = {
            "location": location,
            "temperature": "72",
            "unit": unit,
            "forecast": ["sunny", "windy"],
        }
        return json.dumps(weather_info)

    messages = [{"role": "user", "content": "What's the weather like in Louisville?"}]

    functions = [
        {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["location"],
            },
        }
    ]

    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo-0613",
        messages=messages,
        functions=functions,
        function_call="auto",  # auto is default, but we'll be explicit
    )

    print(response)

This code works almost exactly as regular ChatGPT completions except for the functions and function_call parameters.

These parameters tell the API which functions are available to call and how you want it to decide whether to call one.

I’ll explain the different function_call options in function_call Parameter Options.

I’ll explain how to generate the functions parameter in the Generate a Function Call JSON Schema section.

Step 4: Run the Python File

Run the code with:

    python3 function_calling.py

You should see something like this:

    {
      "id": "chatcmpl-7T90jy4dhLwKgIxwI8zRkGNSdAKJc",
      "object": "chat.completion",
      "created": 1687180505,
      "model": "gpt-3.5-turbo-0613",
      "choices": [
        {
          "index": 0,
          "message": {
            "role": "assistant",
            "content": null,
            "function_call": {
              "name": "get_current_weather",
              "arguments": "{\n \"location\": \"Louisville, KY\"\n}"
            }
          },
          "finish_reason": "function_call"
        }
      ],
      "usage": {
        "prompt_tokens": 82,
        "completion_tokens": 19,
        "total_tokens": 101
      }
    }

As you can see, the response contains a function_call object with the function name and arguments.

The API correctly identified that the user was asking for the weather in Louisville, KY and returned the function name get_current_weather and the argument {"location": "Louisville, KY"}.

Step 5: Call the Function

Now that we have the function name and arguments, we can call the function.

Add the following code to the end of function_calling.py:

    # ... Previous code

    response_message = response["choices"][0]["message"]

    # Note: the JSON response may not always be valid; be sure to handle errors
    function_args = json.loads(response_message["function_call"]["arguments"])

    available_functions = {
        "get_current_weather": get_current_weather,
    }  # only one function in this example, but you can have multiple

    function_name = response_message["function_call"]["name"]
    function_to_call = available_functions[function_name]

    # Fall back to the function's default unit when the model omits it
    function_response = function_to_call(
        location=function_args.get("location"),
        unit=function_args.get("unit", "fahrenheit"),
    )

    print(function_response)

Run the code and you should see the following output (formatted here for readability):

    {
      "location": "Louisville, KY",
      "temperature": "72",
      "unit": "fahrenheit",
      "forecast": [
        "sunny",
        "windy"
      ]
    }

Congratulations! You’ve successfully used the new gpt-3.5-turbo-0613 and gpt-4-0613 models to call a function.

You can add more functions to the available_functions dictionary to give the API more options to choose from.
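The note in the code above says the arguments may not always be valid JSON. One possible way to guard against that is sketched below. parse_arguments and call_weather are hypothetical helpers, and the error payload format is an assumption rather than anything the API prescribes.

```python
import json


def get_current_weather(location, unit="fahrenheit"):
    """Dummy function from this tutorial: always returns the same weather."""
    weather_info = {
        "location": location,
        "temperature": "72",
        "unit": unit,
        "forecast": ["sunny", "windy"],
    }
    return json.dumps(weather_info)


def parse_arguments(raw_arguments):
    """Decode the arguments string; return None unless it is a JSON object."""
    try:
        parsed = json.loads(raw_arguments)
    except (json.JSONDecodeError, TypeError):
        return None
    return parsed if isinstance(parsed, dict) else None


def call_weather(raw_arguments):
    """Call get_current_weather only when the arguments are usable."""
    args = parse_arguments(raw_arguments)
    if args is None or "location" not in args:
        # Assumed error format for this sketch.
        return json.dumps({"error": "invalid arguments"})
    return get_current_weather(args["location"], args.get("unit", "fahrenheit"))


print(call_weather('{"location": "Louisville, KY"}'))
print(call_weather('{"location": "Louisville, KY"'))  # truncated JSON
```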

Diving Deeper

function_call Parameter Options

You may have noticed that we passed the function_call parameter as auto in the openai.ChatCompletion.create call.

The function_call parameter controls whether and how the model suggests a function call.

- “none” means the model does not call a function and responds to the end user directly.

  - The API will generate a regular ChatGPT completion instead of suggesting a function call.

  - The regular response will be inside the “content” field of the response message.

- “auto” means the model can pick between a regular completion and calling a function.

  - The API will generate a regular ChatGPT completion or suggest a function call, whichever it judges to be the better response.

- Specifying a particular function via {"name": "my_function"} forces the model to call that function.

  - You can use this when you know the function should be called and don’t want to rely on the model to decide.

“none” is the default when no functions are present.

“auto” is the default if functions are present.
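The three options can be compared side by side with a small helper that only assembles the request arguments and never calls the API. build_request_kwargs is a hypothetical name introduced for this sketch, and the abbreviated schema is a stand-in for the full one from Step 3.

```python
def build_request_kwargs(messages, functions=None, function_call=None):
    """Assemble keyword arguments for a chat completion request (no API call)."""
    kwargs = {"model": "gpt-3.5-turbo-0613", "messages": messages}
    if functions:
        kwargs["functions"] = functions
        # Leave function_call out to let the API apply its default.
        if function_call is not None:
            kwargs["function_call"] = function_call
    return kwargs


messages = [{"role": "user", "content": "What's the weather like in Louisville?"}]
# Abbreviated stand-in for the get_current_weather schema from Step 3.
functions = [
    {"name": "get_current_weather", "parameters": {"type": "object", "properties": {}}}
]

for option in ("none", "auto", {"name": "get_current_weather"}):
    kwargs = build_request_kwargs(messages, functions, function_call=option)
    print(kwargs["function_call"])
```

The resulting dictionary could then be passed along as openai.ChatCompletion.create(**kwargs).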

Multiple Functions

You can emulate multiple function calls with a single request.

Problem

Imagine that you have a function called get_Weather that returns the weather for a given location and a function called get_Events that returns events for a given location and date.

A user might ask:

What events should I go to in San Francisco on May 25th 2023 based on the weather?

The user is asking for two things:

- The weather in San Francisco on May 25th 2023

- Events in San Francisco on May 25th 2023

Without a workaround, you would need to hit the API twice to get the weather and events.

You could make one request with function_call set to {"name": "get_Weather"} and another request with function_call set to {"name": "get_Events"}.

Solution

If you craft your JSON Schema as if you had a single function that calls both, you can make a single request and get both the weather and events.

Pretend you have a function called multi_Func that calls get_Weather and get_Events.

The parameters for multi_Func would be the parameters for get_Weather and get_Events.

Here is the JSON Schema that you can use for this:

    {
      "name": "multi_Func",
      "description": "Call two functions in one call",
      "parameters": {
        "type": "object",
        "properties": {
          "get_Weather": {
            "name": "get_Weather",
            "description": "Get the weather for the location.",
            "parameters": {
              "type": "object",
              "properties": {
                "location": {
                  "type": "string",
                  "description": "The city and state, e.g. San Francisco, CA"
                }
              },
              "required": ["location"]
            }
          },
          "get_Events": {
            "name": "get_Events",
            "description": "Get events for the location at specified date",
            "parameters": {
              "type": "object",
              "properties": {
                "location": {
                  "type": "string",
                  "description": "The city and state, e.g. San Francisco, CA"
                },
                "date": {
                  "type": "string",
                  "description": "The date of the event, e.g. 2021-01-01."
                }
              },
              "required": ["location", "date"]
            }
          }
        },
        "required": ["get_Weather", "get_Events"]
      }
    }

Using this JSON Schema, you can make a single request with function_call set to {"name": "multi_Func"}.

The result will be a JSON object with the parameters you need to call get_Weather and get_Events.
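Once the arguments come back, you still have to split them up and call the two real functions yourself. The sketch below assumes the model returns one argument object per function name. The sample string is hand-written, the actual nesting may differ, and both function bodies are hypothetical stubs.

```python
import json


def get_Weather(location):
    """Hypothetical stub: a real version would query a weather service."""
    return {"location": location, "forecast": "sunny"}


def get_Events(location, date):
    """Hypothetical stub: a real version would query an events service."""
    return [{"name": "Sample event", "location": location, "date": date}]


# Hand-written sample of the arguments string returned for multi_Func.
sample_arguments = json.dumps({
    "get_Weather": {"location": "San Francisco, CA"},
    "get_Events": {"location": "San Francisco, CA", "date": "2023-05-25"},
})

args = json.loads(sample_arguments)
# Unpack each sub-object into the matching function.
weather = get_Weather(**args["get_Weather"])
events = get_Events(**args["get_Events"])

print(weather)
print(events)
```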

Any Structured Data / Non-existent Functions

As shown in the multiple functions section, you don’t need to have a function that exists in order to use function calling.

You can use function calling to get structured data from any text by creating a dummy function that accepts the data you want to extract as parameters.

Let’s say you want to extract data from the Wikipedia article about the Golden Gate Bridge. The first sentence of the article is:

The Golden Gate Bridge is a suspension bridge spanning the Golden Gate, the one-mile-wide (1.6 km) strait connecting San Francisco Bay and the Pacific Ocean.

You can create a dummy function called process_proper_nouns that accepts a list of proper nouns as a parameter.

I generated a JSON Schema for this function using ChatGPT:

Made up function:

    def process_proper_nouns(nouns):
        return process(nouns)

Output JSON Schema from ChatGPT prompt:

    {
      "name": "process_proper_nouns",
      "description": "Process an array of proper nouns",
      "parameters": {
        "type": "object",
        "properties": {
          "nouns": {
            "type": "array",
            "items": {"type": "string"},
            "description": "Array of proper nouns to be processed"
          }
        },
        "required": ["nouns"]
      }
    }

By passing the first sentence of the Wikipedia article as the prompt with function_call set to {"name": "process_proper_nouns"}, the gpt-3.5-turbo-0613 model suggests the following function call:

    function_call: {
      "name": "process_proper_nouns",
      "arguments": "{\n \"nouns\": [\"Golden Gate Bridge\", \"Golden Gate\", \"San Francisco Bay\", \"Pacific Ocean\"]\n}"
    }

The API correctly identified the proper nouns in the sentence and returned them as the arguments to the process_proper_nouns function.

When using this technique, you should always specify the function name in function_call to ensure the API returns structured data.
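Since process_proper_nouns never actually runs, the useful part is the decoded arguments. A minimal sketch of pulling the list out of the function call shown above follows. extract_nouns is a hypothetical helper, and returning an empty list for malformed input is just one possible choice.

```python
import json

# The function call suggested by the model in the example above.
function_call = {
    "name": "process_proper_nouns",
    "arguments": "{\n \"nouns\": [\"Golden Gate Bridge\", \"Golden Gate\", \"San Francisco Bay\", \"Pacific Ocean\"]\n}",
}


def extract_nouns(call):
    """Return the nouns from a function call, or an empty list if malformed."""
    if call.get("name") != "process_proper_nouns":
        return []
    try:
        nouns = json.loads(call["arguments"]).get("nouns", [])
    except (json.JSONDecodeError, KeyError, AttributeError, TypeError):
        return []
    if not isinstance(nouns, list):
        return []
    # Keep only string entries, as the schema declares an array of strings.
    return [noun for noun in nouns if isinstance(noun, str)]


print(extract_nouns(function_call))
```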

Generate a Function Call JSON Schema

Here is a ChatGPT prompt you can use to generate a function call description and parameters:

    Here is an example JSON Schema for a function:

    {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The city and state, e.g. San Francisco, CA",
                },
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    }

    This is for the following function:

    def get_current_weather(location, unit="fahrenheit"):
        """Get the current weather in a given location"""
        weather_info = {
            "location": location,
            "temperature": "72",
            "unit": unit,
            "forecast": ["sunny", "windy"],
        }
        return json.dumps(weather_info)

    Given this example please generate a JSON Schema for the following function:

    {INSERT YOUR FUNCTION}

Replace {INSERT YOUR FUNCTION} with your function and run the prompt.
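If you generate schemas often, the substitution can be scripted. The sketch below rebuilds the prompt from its parts and appends the source of the function you pass in. build_schema_prompt is a hypothetical helper, and the example schema is serialized as strict JSON, so the text differs slightly from the hand-written prompt above.

```python
import json

# Example schema and function taken from this tutorial's weather example.
EXAMPLE_SCHEMA = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "The city and state, e.g. San Francisco, CA",
            },
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}

EXAMPLE_FUNCTION = '''def get_current_weather(location, unit="fahrenheit"):
    """Get the current weather in a given location"""
    weather_info = {
        "location": location,
        "temperature": "72",
        "unit": unit,
        "forecast": ["sunny", "windy"],
    }
    return json.dumps(weather_info)'''


def build_schema_prompt(function_source):
    """Fill the prompt template with the source code of the target function."""
    return "\n\n".join([
        "Here is an example JSON Schema for a function:",
        json.dumps(EXAMPLE_SCHEMA, indent=2),
        "This is for the following function:",
        EXAMPLE_FUNCTION,
        "Given this example please generate a JSON Schema for the following function:",
        function_source.strip(),
    ])


prompt = build_schema_prompt(
    "def process_proper_nouns(nouns):\n    return process(nouns)"
)
print(prompt)
```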

Troubleshooting

Some people have found that the gpt-3.5-turbo-0613 and gpt-4-0613 models erroneously suggest a call to a function named python when that is not a valid choice.

The API is hallucinating the python function call’s existence.

Some potential solutions are:

- Specify a function to call with function_call instead of using auto.

- Add a dummy python function to your functions list with a description like “This function does not exist”.

- Try using the gpt-4-0613 model.

- Try variations of your function names and descriptions.
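Whichever of these you try, it may also be worth checking the suggested name before dispatching, so that a hallucinated call is ignored rather than raising a KeyError. This is a minimal sketch: resolve_function is a hypothetical helper, and both sample calls are hand-written.

```python
import json


def get_current_weather(location, unit="fahrenheit"):
    """Simplified dummy function for this sketch."""
    return json.dumps({"location": location, "temperature": "72", "unit": unit})


available_functions = {"get_current_weather": get_current_weather}


def resolve_function(function_call, available_functions):
    """Return the callable for a suggested call, or None if the name is unknown."""
    name = (function_call or {}).get("name")
    return available_functions.get(name)


# Hand-written samples: one hallucinated call and one valid call.
hallucinated = {"name": "python", "arguments": "print('hello')"}
valid = {
    "name": "get_current_weather",
    "arguments": "{\"location\": \"Louisville, KY\"}",
}

for call in (hallucinated, valid):
    func = resolve_function(call, available_functions)
    if func is None:
        print(f"Ignoring unknown function: {call['name']}")
    else:
        print(func(**json.loads(call["arguments"])))
```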

Conclusion

OpenAI’s gpt-3.5-turbo-0613 and gpt-4-0613 models generate structured JSON responses that can be used to call functions. This enables developers to create ChatGPT plugin functionality anywhere.

By using the function_call parameter, you can control how the API responds to function calls.

You can use function calling to extract structured data from text by creating a dummy function that accepts the data you want to extract as parameters.

You can generate a JSON Schema for a function by using the Generate a Function Call JSON Schema prompt.

If you have any questions, feel free to join our Discord.
